Trying out Llama 4 (llama.cpp)

I ran the model below on a machine with one RTX 4090 and two RTX 3090s, for 72 GB of VRAM in total.

For the procedure, see https://docs.unsloth.ai/basics/tutorial-how-to-run-and-fine-tune-llama-4

unsloth/Llama-4-Scout-17B-16E-Instruct-GGUF/Llama-4-Scout-17B-16E-Instruct-UD-IQ2_XXS.gguf

./llama.cpp/llama-cli --model unsloth/Llama-4-Scout-17B-16E-Instruct-GGUF/Llama-4-Scout-17B-16E-Instruct-UD-IQ2_XXS.gguf --threads 32 --ctx-size 16384 --n-gpu-layers 99 --seed 3407 --prio 3 --temp 0.6 --min-p 0.01 --top-p 0.9 -no-cnv --prompt "<|header_start|>user<|header_end|>\n\nCreate a Flappy Bird game in Python. You must include these things:\n1. You must use pygame.\n2. The background color should be randomly chosen and is a light shade. Start with a light blue color.\n3. Pressing SPACE multiple times will accelerate the bird.\n4. The bird's shape should be randomly chosen as a square, circle or triangle. The color should be randomly chosen as a dark color.\n5. Place on the bottom some land colored as dark brown or yellow chosen randomly.\n6. Make a score shown on the top right side. Increment if you pass pipes and don't hit them.\n7. Make randomly spaced pipes with enough space. Color them randomly as dark green or light brown or a dark gray shade.\n8. When you lose, show the best score. Make the text inside the screen. Pressing q or Esc will quit the game. Restarting is pressing SPACE again.\nThe final game should be inside a markdown section in Python. Check your code for errors and fix them before the final markdown section.<|eot|><|header_start|>assistant<|header_end|>\n\n"

The performance was:

llama_perf_sampler_print: sampling time = 59.52 ms / 1311 runs ( 0.05 ms per token, 22026.58 tokens per second)

llama_perf_context_print: load time = 14681.95 ms

llama_perf_context_print: prompt eval time = 189.86 ms / 220 tokens ( 0.86 ms per token, 1158.75 tokens per second)

llama_perf_context_print: eval time = 19427.52 ms / 1090 runs ( 17.82 ms per token, 56.11 tokens per second)

llama_perf_context_print: total time = 19829.90 ms / 1310 tokens

| Item | Value |
|---|---|
| Sampling time | 0.05 ms/token (22,026.58 tokens/s) |
| Prompt eval time | 0.86 ms/token (1,158.75 tokens/s) |
| Eval time | 17.82 ms/token (56.11 tokens/s) |
| Total time | about 19.8 s (1,310 tokens) |

At this speed, Scout seems quite usable.
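To avoid copying these numbers by hand, the `llama_perf_*` lines can be parsed with a short script. This is a minimal sketch, assuming the log lines look like the ones pasted above; the regular expression and the `parse_perf` helper are my own illustration, not part of llama.cpp.

```python
import re

# Matches lines such as:
#   llama_perf_context_print: eval time = 19427.52 ms / 1090 runs ( 17.82 ms per token, 56.11 tokens per second)
# The "/ N tokens|runs" part and the parenthesised rates are optional
# (the "load time" line has neither, "total time" has no rates).
PERF_LINE = re.compile(
    r"llama_perf_\w+:\s*(?P<name>[a-z ]+?)\s*=\s*(?P<ms>[\d.]+)\s*ms"
    r"(?:\s*/\s*(?P<count>\d+)\s*(?:tokens|runs))?"
    r"(?:\s*\(\s*(?P<ms_per_token>[\d.]+)\s*ms per token,"
    r"\s*(?P<tps>[\d.]+)\s*tokens per second\s*\))?"
)


def parse_perf(log_text):
    """Return {metric name: {"ms", "count", "tokens_per_second"}} for each perf line."""
    metrics = {}
    for m in PERF_LINE.finditer(log_text):
        metrics[m.group("name")] = {
            "ms": float(m.group("ms")),
            "count": int(m.group("count")) if m.group("count") else None,
            "tokens_per_second": float(m.group("tps")) if m.group("tps") else None,
        }
    return metrics


# The Scout log from this post, used as sample input.
SCOUT_LOG = """
llama_perf_sampler_print: sampling time = 59.52 ms / 1311 runs ( 0.05 ms per token, 22026.58 tokens per second)
llama_perf_context_print: load time = 14681.95 ms
llama_perf_context_print: prompt eval time = 189.86 ms / 220 tokens ( 0.86 ms per token, 1158.75 tokens per second)
llama_perf_context_print: eval time = 19427.52 ms / 1090 runs ( 17.82 ms per token, 56.11 tokens per second)
llama_perf_context_print: total time = 19829.90 ms / 1310 tokens
"""

scout_metrics = parse_perf(SCOUT_LOG)
for name, values in scout_metrics.items():
    print(name, values)
```

The same function can be pointed at any other run's log, which makes side-by-side comparisons less error-prone than retyping the figures.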

While I was at it, I also ran it as an OpenAI-compatible server.

# llama.cpp is already built locally with GPU support, so reuse that build

export LLAMA_CPP_LIB_PATH=/home/euno/git/llama.cpp/build/bin

git clone https://github.com/abetlen/llama-cpp-python.git

cd llama-cpp-python

CMAKE_ARGS="-DLLAMA_BUILD=OFF" pip install .

python3 -m llama_cpp.server \
--model ./unsloth/Llama-4-Scout-17B-16E-Instruct-GGUF/Llama-4-Scout-17B-16E-Instruct-UD-IQ2_XXS.gguf \
--host 0.0.0.0 \
--port 8000 \
--n_threads 32 \
--n_ctx 16384 \
--n_gpu_layers 99 \
--seed 3407 \
--chat_format chatml

The options are the same as in the llama-cli run, so GPU usage is the same.

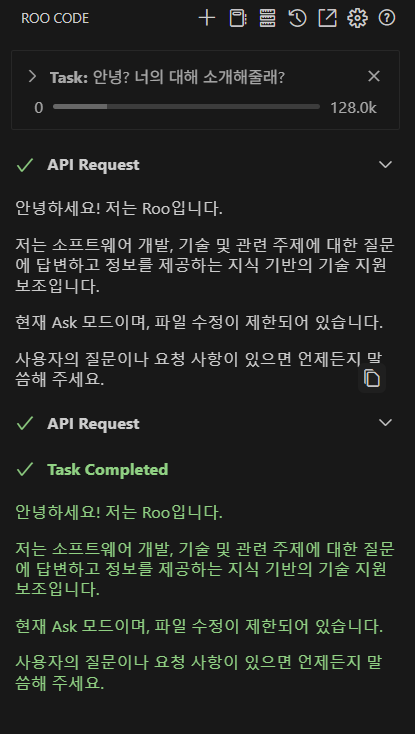
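Once the server is up, any OpenAI-style client can talk to it. Below is a minimal sketch using only the Python standard library. It assumes the server is reachable at `localhost:8000` (the port used above) and exposes the usual `/v1/chat/completions` route; `build_chat_request` and `chat` are hypothetical helper names, and `max_tokens=512` is an arbitrary choice for illustration.

```python
import json
import urllib.request

# Assumption: the llama_cpp.server process started above listens on port 8000
# of this machine.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt, temperature=0.6, max_tokens=512):
    """Build an OpenAI-style chat completion request for a single user message."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # same temperature as the llama-cli run
        "max_tokens": max_tokens,    # illustrative cap, not from the original run
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the prompt to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(chat("Say hello in one sentence."))` should print the model's reply. Tools that accept a custom OpenAI base URL can be pointed at the same `http://localhost:8000/v1` address.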

I also tested whether it works with an agent, and it ran without problems.

Looking at the temperatures and the electricity bill, though... I'm probably better off just using Cursor.

--Added 2025-04-24--

Maverick runs fine as well.

llama_perf_sampler_print: sampling time = 75.18 ms / 1062 runs ( 0.07 ms per token, 14126.85 tokens per second)

llama_perf_context_print: load time = 99937.80 ms

llama_perf_context_print: prompt eval time = 903.30 ms / 19 tokens ( 47.54 ms per token, 21.03 tokens per second)

llama_perf_context_print: eval time = 29317.80 ms / 1042 runs ( 28.14 ms per token, 35.54 tokens per second)

llama_perf_context_print: total time = 30531.93 ms / 1061 tokens

Comparison

unsloth/Llama-4-Scout-17B-16E-Instruct-GGUF/Llama-4-Scout-17B-16E-Instruct-UD-IQ2_XXS.gguf

unsloth/Llama-4-Maverick-17B-128E-Instruct-GGUF/UD-IQ1_S/Llama-4-Maverick-17B-128E-Instruct-UD-IQ1_S-00001-of-00003.gguf

| Metric | LLaMA4 Scout | LLaMA4 Maverick |
|---|---|---|
| Sampling Time (ms) | 59.52 | 75.18 |
| Sampling Speed (tokens/sec) | 22026.58 | 14126.85 |
| Load Time (ms) | 14681.95 | 99937.80 |
| Prompt Eval Time (ms) | 189.86 | 903.30 |
| Prompt Eval Speed (tokens/sec) | 1158.75 | 21.03 |
| Eval Time (ms) | 19427.52 | 29317.80 |
| Eval Speed (tokens/sec) | 56.11 | 35.54 |
| Total Time (ms) | 19829.90 | 30531.93 |
| Total Tokens | 1310 | 1061 |

LLaMA4 Scout vs Maverick - performance comparison summary (time-based)

1. Sampling Time

- Scout: 59.52ms

- Maverick: 75.18ms

🔹 Scout is faster

2. Load Time

- Scout: 14,681.95ms

- Maverick: 99,937.80ms

🔹 Scout loads far faster (roughly 6.8x)

3. Prompt Eval Time

- Scout: 189.86ms

- Maverick: 903.30ms

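🔹 Scout took about 5x less time, even though its prompt was 220 tokens against Maverick's 19; per token the gap is about 55x (1,158.75 vs 21.03 tokens/s)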

4. Eval Time

- Scout: 19,427.52ms

- Maverick: 29,317.80ms

🔹 Scout is faster (56.11 vs 35.54 tokens/s)

5. Total Time

- Scout: 19,829.90ms

- Maverick: 30,531.93ms

🔹 Scout also comes out ahead on total processing time
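Because the two runs used different prompts and generated different numbers of tokens, raw times are only a rough guide. A small sketch of a per-token comparison, using only the figures from the two logs in this post (the dictionary keys and helper names are my own):

```python
# Figures copied from the two llama.cpp runs reported in this post.
scout = {
    "load_ms": 14681.95,
    "prompt_ms": 189.86, "prompt_tokens": 220,
    "eval_ms": 19427.52, "eval_tokens": 1090,
}
maverick = {
    "load_ms": 99937.80,
    "prompt_ms": 903.30, "prompt_tokens": 19,
    "eval_ms": 29317.80, "eval_tokens": 1042,
}


def tokens_per_second(ms, tokens):
    """Convert an elapsed time in milliseconds and a token count into tokens/s."""
    return tokens / (ms / 1000.0)


def compare(fast, slow):
    """Return how many times better `fast` is than `slow` on each metric."""
    return {
        # Load time is a plain duration, so compare the durations directly.
        "load_time": slow["load_ms"] / fast["load_ms"],
        # Prompt and generation are normalised per token before comparing.
        "prompt_speed": tokens_per_second(fast["prompt_ms"], fast["prompt_tokens"])
        / tokens_per_second(slow["prompt_ms"], slow["prompt_tokens"]),
        "eval_speed": tokens_per_second(fast["eval_ms"], fast["eval_tokens"])
        / tokens_per_second(slow["eval_ms"], slow["eval_tokens"]),
    }


ratios = compare(scout, maverick)
for metric, ratio in ratios.items():
    print(f"{metric}: Scout is {ratio:.2f}x better")
```

Note that Maverick's prompt was only 19 tokens, so its prompt throughput is measured on a very small batch and is probably not representative of longer prompts.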